Deploying a Microservice Project on K8s and Collecting Logs with ELK and Filebeat

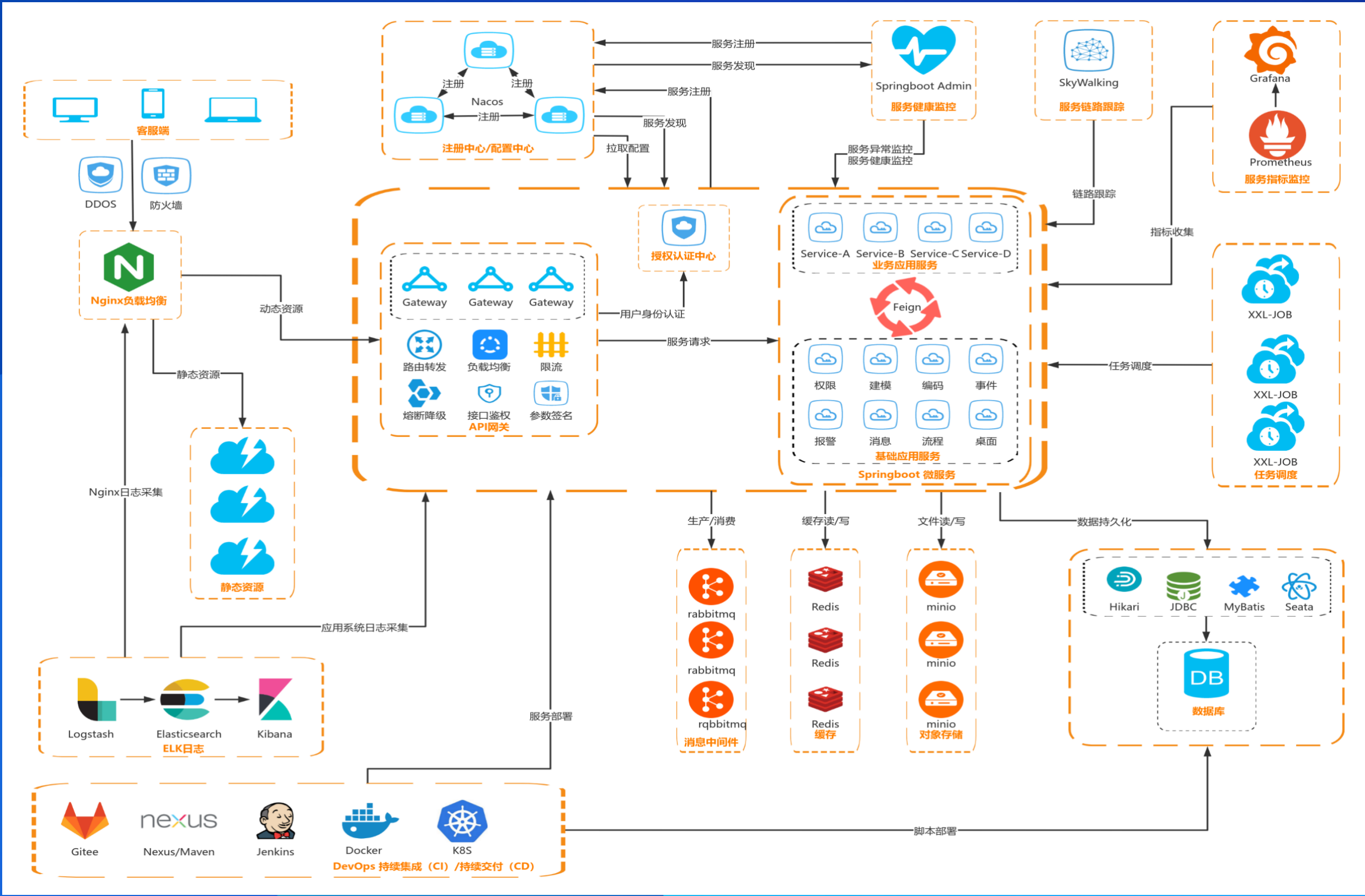

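I. This article describes how to deploy a microservice project in a Kubernetes (K8s) environment and how to collect and manage its logs efficiently with ELK (Elasticsearch, Logstash, Kibana) and Filebeat. Working through it shows the basic K8s deployment workflow and how ELK and Filebeat fit into log collection, which in turn helps with system monitoring and troubleshooting.

II. It also records some pitfalls, and the steps taken to resolve them, encountered while automating the release of a Spring Boot project and while using Kubernetes.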

System architecture

Frontend: Vue 3, Element Plus, Taro

Backend: Spring Boot 3, JDK 21, Spring Cloud Alibaba

Servers: CentOS 7

Server list

| IP | Purpose |
|---|---|
| 192.168.18.197 | K8s-master |
| 192.168.18.198 | K8s-node1 |
| 192.168.18.199 | K8s-node2 |
| 192.168.18.191 | K8s-node3 |
| 192.168.18.190 | K8s-rancher, jenkins |
| 192.168.18.69 | elk, sky log server |

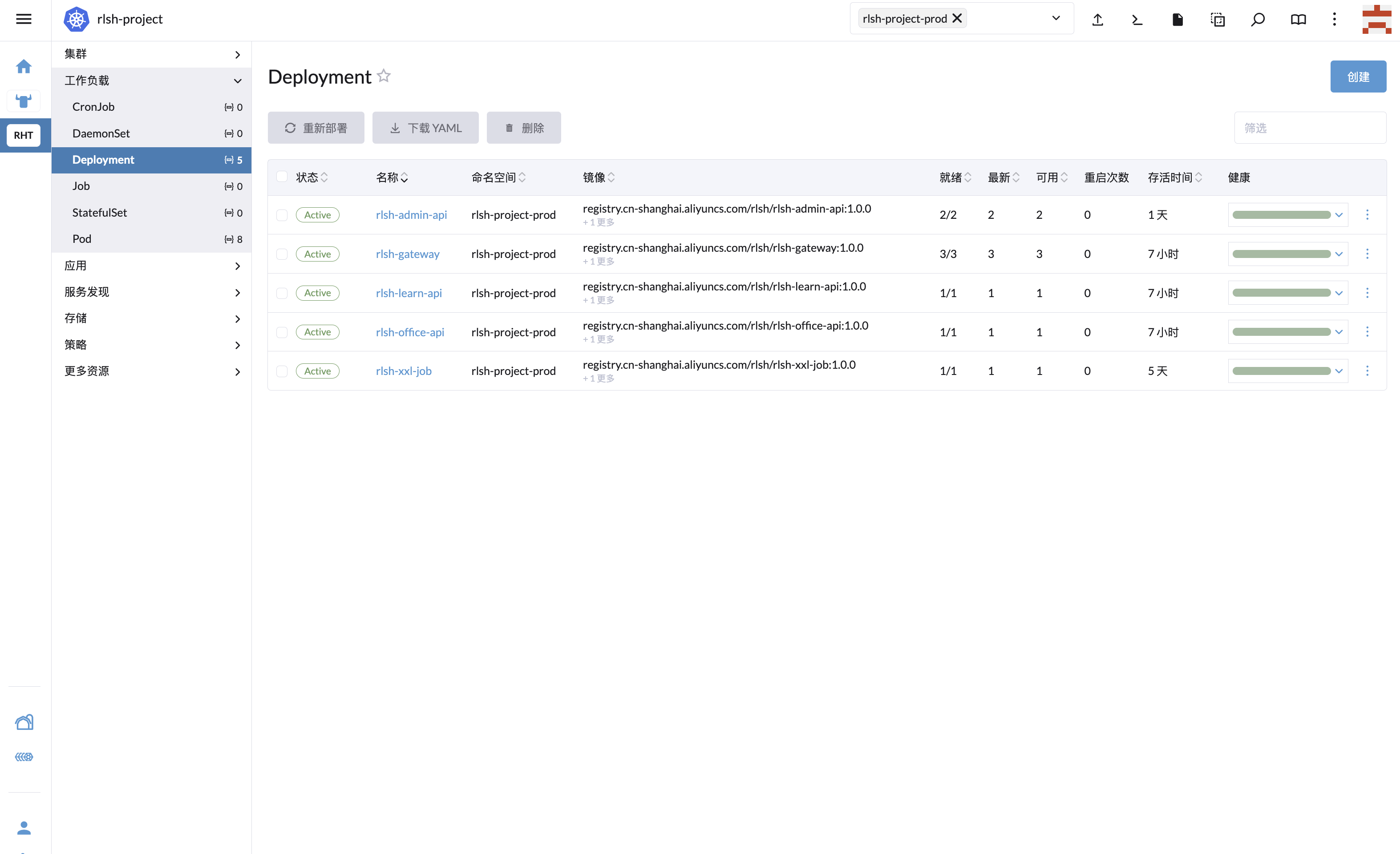

Results

Architecture design

Deploying the Spring Boot project on K8s

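1. Create the project namespace

kubectl create namespace rlsh-project-prod

2. Create the private registry secret

(Note the trailing -n, which specifies the namespace. Without it the secret is created in the current context's namespace, usually default, where the Deployment in rlsh-project-prod cannot reference it.)

kubectl create secret docker-registry registry-secret --docker-server=registry.cn-shanghai.aliyuncs.com --docker-username=username --docker-password=password -n rlsh-project-prod

3. Create the Filebeat ConfigMap

Create filebeat.yml in the current directory, then run: kubectl create configmap -n rlsh-project-prod filebeat-config --from-file=filebeat.yml=filebeat.yml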
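The multiline settings in filebeat.yml (pattern, negate: true, match: after) tell Filebeat to treat every line that does not start with a yyyy-MM-dd date as a continuation of the previous event, which keeps a Java stack trace inside one message. The following is a minimal shell sketch of that grouping rule, for illustration only. The function name group_multiline and the sample log lines are made up, and the max_lines and timeout limits are not modelled.

```shell
#!/bin/sh
# Emulate Filebeat's multiline grouping (negate: true, match: after):
# a line that does NOT start with a date is appended to the previous event.
group_multiline() {
  awk '
    /^[0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9]/ {
      if (have) print event        # a new dated line closes the previous event
      event = $0
      have = 1
      next
    }
    {
      # continuation line, for example a stack-trace frame
      if (have) event = event " | " $0
      else { event = $0; have = 1 }
    }
    END { if (have) print event }  # flush the last event
  '
}

# Hypothetical sample input: one error with a stack trace, then one info line.
printf '%s\n' \
  '2024-05-01 10:00:00 ERROR request failed' \
  'java.lang.IllegalStateException: boom' \
  '    at com.example.Demo.run(Demo.java:10)' \
  '2024-05-01 10:00:01 INFO recovered' | group_multiline
```

Each output line stands for one event as Filebeat would ship it; the " | " separator only marks where the original line breaks were.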

# vim filebeat.yml
# kubectl create configmap -n rlsh-project-prod filebeat-config --from-file=filebeat.yml=filebeat.yml
filebeat.inputs:
  - type: log
    paths:
      - /logs/debug-*.log
    multiline:
      pattern: '^\d{4}-\d{2}-\d{2}'
      negate: true
      match: after
      max_lines: 2000
      timeout: 2s
    fields:
      logtopic: "${KAFKA_LOG_NAME}-debug"
      evn: prod
  - type: log
    paths:
      - /logs/info-*.log
    multiline:
      pattern: '^\d{4}-\d{2}-\d{2}'
      negate: true
      match: after
      max_lines: 2000
      timeout: 2s
    fields:
      logtopic: "${KAFKA_LOG_NAME}-info"
      evn: prod
  - type: log
    paths:
      - /logs/error-*.log
    multiline:
      pattern: '^\d{4}-\d{2}-\d{2}'
      negate: true
      match: after
      max_lines: 2000
      timeout: 2s
    fields:
      logtopic: "${KAFKA_LOG_NAME}-error"
      evn: prod
  - type: log
    paths:
      - /logs/druid-*.log
    multiline:
      pattern: '^\d{4}-\d{2}-\d{2}'
      negate: true
      match: after
      max_lines: 2000
      timeout: 2s
    fields:
      logtopic: "${KAFKA_LOG_NAME}-druid"
      evn: prod
output.kafka:
  hosts: ["${KAFKA_HOST1}","${KAFKA_HOST2}"]
  topic: '%{[fields.logtopic]}'
  partition.round_robin:
    reachable_only: false
  required_acks: 1
  compression: gzip
  max_message_bytes: 1000000

4. K8s deployment file

apiVersion: apps/v1
kind: Deployment
metadata:
  name: rlsh-gateway
  namespace: rlsh-project-prod
  labels:
    app: rlsh-gateway
spec:
  replicas: 3
  selector:
    matchLabels:
      app: rlsh-gateway
  template:
    metadata:
      labels:
        app: rlsh-gateway
    spec:
      imagePullSecrets:
        - name: registry-secret
      containers:
        - name: rlsh-gateway
          image: registry.cn-shanghai.aliyuncs.com/*** */:1.0.0
          imagePullPolicy: Always
          volumeMounts:
            - name: timezone
              mountPath: /etc/localtime
            - name: app-logs
              mountPath: /logs
          ports:
            - containerPort: 17073
          env:
            - name: JAVA_OPTS
              value: '-Xms256M -Xmx512M'
        - name: filebeat
          image: docker.elastic.co/beats/filebeat:8.13.3
          volumeMounts:
            - name: app-logs
              mountPath: /logs
            - name: timezone
              mountPath: /etc/localtime
            - name: config-volume
              mountPath: /usr/share/filebeat/filebeat.yml
              subPath: filebeat.yml
          env:
            - name: FILEBEAT_LOG_PATH
              value: "/logs/*.log"
            - name: KAFKA_HOST1
              value: "192.168.18.241:9092"
            - name: KAFKA_HOST2
              value: "192.168.18.190:9092"
            - name: KAFKA_LOG_NAME
              value: "rlsh-prod-gateway-log"
      volumes:
        - name: timezone
          hostPath:
            path: /usr/share/zoneinfo/Asia/Shanghai
        - name: app-logs
          emptyDir: { }
        - name: config-volume
          configMap:
            name: filebeat-config
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1

5. Create the Service and Ingress in K8s

vim deploy-service.yml

kubectl apply -n rlsh-project-prod -f deploy-service.yml

# Create the Service for the Pods
apiVersion: v1
kind: Service
metadata:
  name: rlsh-gateway
  namespace: rlsh-project-prod
spec:
  ports:
    - port: 80
      protocol: TCP
      targetPort: 17073
  selector:
    app: rlsh-gateway
---
# Create the Ingress and define the access rules; remember to install the nginx ingress controller beforehand
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: rlsh-gateway-ingress
  namespace: rlsh-project-prod
spec:
  ingressClassName: nginx
  rules:
    - host: xxx.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: rlsh-gateway
                port:
                  number: 80

6. Automated release script

Adjust the file paths below to match your own environment.

vim deploy.sh

#!/bin/bash

# Paths of the deployment files
DEPLOYMENT_FILE="/mnt/project/deploy/rlsh-gateway/deploy/prod/deploy-k8s.yml"
SERVICE_FILE="/mnt/project/deploy/rlsh-gateway/deploy/prod/deploy-service.yml"
LOG_FILE="/mnt/project/deploy/rlsh-gateway/deploy/prod/deployment.log"

# Deployment name and namespace (make sure they match the values in your deployment file)
DEPLOYMENT_NAME="rlsh-gateway"
NAMESPACE="rlsh-project-prod"

# Check whether the Deployment exists
if kubectl get deployment "$DEPLOYMENT_NAME" -n "$NAMESPACE" > /dev/null 2>&1; then
  echo "Deployment exists, performing rollout restart..." >> "$LOG_FILE"
  # The Deployment exists: run a rollout restart
  kubectl rollout restart "deployment/$DEPLOYMENT_NAME" -n "$NAMESPACE" >> "$LOG_FILE" 2>&1
  # Wait for the rollout to settle
  kubectl rollout status "deployment/$DEPLOYMENT_NAME" -n "$NAMESPACE" --timeout=120s >> "$LOG_FILE" 2>&1
else
  echo "Deployment does not exist, applying configurations..." >> "$LOG_FILE"
  # The Deployment does not exist: create it with apply
  kubectl apply -f "$DEPLOYMENT_FILE" >> "$LOG_FILE" 2>&1
  # Wait for the rollout to settle
  kubectl rollout status "deployment/$DEPLOYMENT_NAME" -n "$NAMESPACE" --timeout=120s >> "$LOG_FILE" 2>&1
fi

# Apply the Service configuration
echo "Applying service configuration..." >> "$LOG_FILE"
kubectl apply -f "$SERVICE_FILE" >> "$LOG_FILE" 2>&1
echo "Deployment process completed." >> "$LOG_FILE"

vim start.sh

#!/bin/bash
sh /mnt/project/deploy/rlsh-gateway/deploy/prod/deploy.sh > /mnt/project/deploy/rlsh-gateway/deploy/prod/start.log 2>&1 &

sh start.sh
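deploy.sh writes the output of kubectl rollout status to the log but does not act on its exit code, so a rollout that times out still ends with "Deployment process completed." A minimal sketch of how the result could be propagated to the caller (for example a Jenkins job) is shown here. The function name restart_and_wait and the 120s default are illustrative choices, not part of the original script.

```shell
#!/bin/sh
# Sketch: restart a Deployment and report failure if the rollout does not
# become ready within the timeout. Only functions are defined here, so
# sourcing this file does not touch the cluster.
restart_and_wait() {
  deployment=$1
  namespace=$2
  timeout=${3:-120s}   # illustrative default, same value as in deploy.sh

  # Trigger a new rollout; give up immediately if the request is rejected.
  kubectl rollout restart "deployment/$deployment" -n "$namespace" || return 1

  # kubectl rollout status exits non-zero when the timeout is exceeded.
  if ! kubectl rollout status "deployment/$deployment" -n "$namespace" --timeout="$timeout"; then
    echo "rollout of $deployment did not finish within $timeout" >&2
    return 1
  fi

  echo "rollout of $deployment finished"
}
```

It could be called as restart_and_wait rlsh-gateway rlsh-project-prod || exit 1, which lets the calling job fail visibly instead of only leaving a trace in the log file.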

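Common commands

Logstash configuration file

The configuration comes in two versions, the original and an optimized one. The optimized version uses a Logstash topic regular expression to consume all of the Kafka log topics with a single input, which makes the file simpler and more efficient.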
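Whether a single pattern really covers every topic can be checked before switching over. The sketch below rebuilds the topic names that Filebeat derives from KAFKA_LOG_NAME plus the four log levels and lists any name that the topics_pattern value ^rlsh-prod.* would miss. The helper names are hypothetical, and grep -E is only an approximation of the Java regular expressions Kafka uses, which is sufficient for a simple prefix pattern like this one.

```shell
#!/bin/sh
# Hypothetical helper: print the topics Filebeat writes to for one service,
# given the KAFKA_LOG_NAME value from its Deployment.
topics_for() {
  for level in debug info error druid; do
    printf '%s-%s\n' "$1" "$level"
  done
}

# Print every topic that the given pattern would NOT pick up.
# Exits non-zero when nothing is printed, that is, when all topics match.
unmatched_topics() {
  pattern=$1
  shift
  for name in "$@"; do
    topics_for "$name"
  done | grep -Ev -- "$pattern"
}

# The two log name prefixes that appear in this article's topic lists.
unmatched_topics '^rlsh-prod.*' rlsh-prod-gateway-log rlsh-prod-admin-log \
  || echo "all topics match"
```

Any topic printed by unmatched_topics would silently stop reaching Elasticsearch after the switch, so an empty result is the precondition for replacing the per-topic inputs.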

Configuration file (original version)

input {
  beats {
    type => "logs"
    port => "5044"
  }
  kafka {
    bootstrap_servers => ["192.168.18.241:9092,192.168.18.190:9092"]
    group_id => "logstash-es-prod"
    auto_offset_reset => "latest"
    consumer_threads => 5
    decorate_events => "true"
    topics => ["rlsh-prod-gateway-log-debug"]
    type => "rlsh-prod-gateway-debug"
  }
  kafka {
    bootstrap_servers => ["192.168.18.241:9092,192.168.18.190:9092"]
    group_id => "logstash-es-prod"
    auto_offset_reset => "latest"
    consumer_threads => 5
    decorate_events => "true"
    topics => ["rlsh-prod-gateway-log-info"]
    type => "rlsh-prod-gateway-info"
  }
  kafka {
    bootstrap_servers => ["192.168.18.241:9092,192.168.18.190:9092"]
    group_id => "logstash-es-prod"
    auto_offset_reset => "latest"
    consumer_threads => 5
    decorate_events => "true"
    topics => ["rlsh-prod-gateway-log-error"]
    type => "rlsh-prod-gateway-error"
  }
  kafka {
    bootstrap_servers => ["192.168.18.241:9092,192.168.18.190:9092"]
    group_id => "logstash-es-prod"
    auto_offset_reset => "latest"
    consumer_threads => 5
    decorate_events => "true"
    topics => ["rlsh-prod-gateway-log-druid"]
    type => "rlsh-prod-gateway-druid"
  }
  kafka {
    bootstrap_servers => ["192.168.18.241:9092,192.168.18.190:9092"]
    group_id => "logstash-es-prod"
    auto_offset_reset => "latest"
    consumer_threads => 5
    decorate_events => "true"
    topics => ["rlsh-prod-admin-log-debug"]
    type => "rlsh-prod-admin-log-debug"
  }
  kafka {
    bootstrap_servers => ["192.168.18.241:9092,192.168.18.190:9092"]
    group_id => "logstash-es-prod"
    auto_offset_reset => "latest"
    consumer_threads => 5
    decorate_events => "true"
    topics => ["rlsh-prod-admin-log-info"]
    type => "rlsh-prod-admin-log-info"
  }
  kafka {
    bootstrap_servers => ["192.168.18.241:9092,192.168.18.190:9092"]
    group_id => "logstash-es-prod"
    auto_offset_reset => "latest"
    consumer_threads => 5
    decorate_events => "true"
    topics => ["rlsh-prod-admin-log-error"]
    type => "rlsh-prod-admin-log-error"
  }
  kafka {
    bootstrap_servers => ["192.168.18.241:9092,192.168.18.190:9092"]
    group_id => "logstash-es-prod"
    auto_offset_reset => "latest"
    consumer_threads => 5
    decorate_events => "true"
    topics => ["rlsh-prod-admin-log-druid"]
    type => "rlsh-prod-admin-log-druid"
  }
}
filter {
  json {
    source => "message"
    target => "parsed_message"
  }
  mutate {
    remove_field => ["parsed_message[@timestamp]", "parsed_message[@metadata]"]
  }
}
output {
  stdout {
    codec => rubydebug
  }
  if [type] =~ /^rlsh-prod/ {
    elasticsearch {
      hosts => "elasticsearch:9200"
      action => "index"
      codec => json
      index => "%{type}-%{+YYYY.MM.dd}"
      user => "elastic"
      password => "elastic"
      # Use sniffing to load-balance log messages across the ES cluster nodes
      sniffing => true
      # Logstash ships with a default mapping template; overwrite it
      template_overwrite => true
    }
  }
  if [type] == 'rlsh-project-prod-admin-api1' {
    elasticsearch {
      hosts => "elasticsearch:9200"
      action => "index"
      codec => json
      index => "%{type}-%{+YYYY.MM.dd}"
      user => "elastic"
      password => "elastic"
    }
  }
}

Configuration file (optimized version)

input {

beats {

type => "logs"

port => "5044"

}

kafka {

bootstrap_servers => "192.168.18.241:9092,192.168.18.190:9092"

group_id => "logstash-es-prod"

auto_offset_reset => "latest"

consumer_threads => 5

decorate_events => "true"

topics_pattern => "^rlsh-prod.*"

}

}

filter {

json {

source => "message"

target => "parsed_message"

}

mutate {

add_field => { "type" => "%{[parsed_message][fields][logtopic]}" }

}

}

output {

stdout {

codec => rubydebug

}

if [type] =~ /^rlsh-prod/ {

elasticsearch {

hosts => "elasticsearch:9200"

action => "index"

codec => json

index => "%{type}-%{+YYYY.MM.dd}"

user => "elastic"

password => "elastic"

sniffing => true

template_overwrite => true

}

}

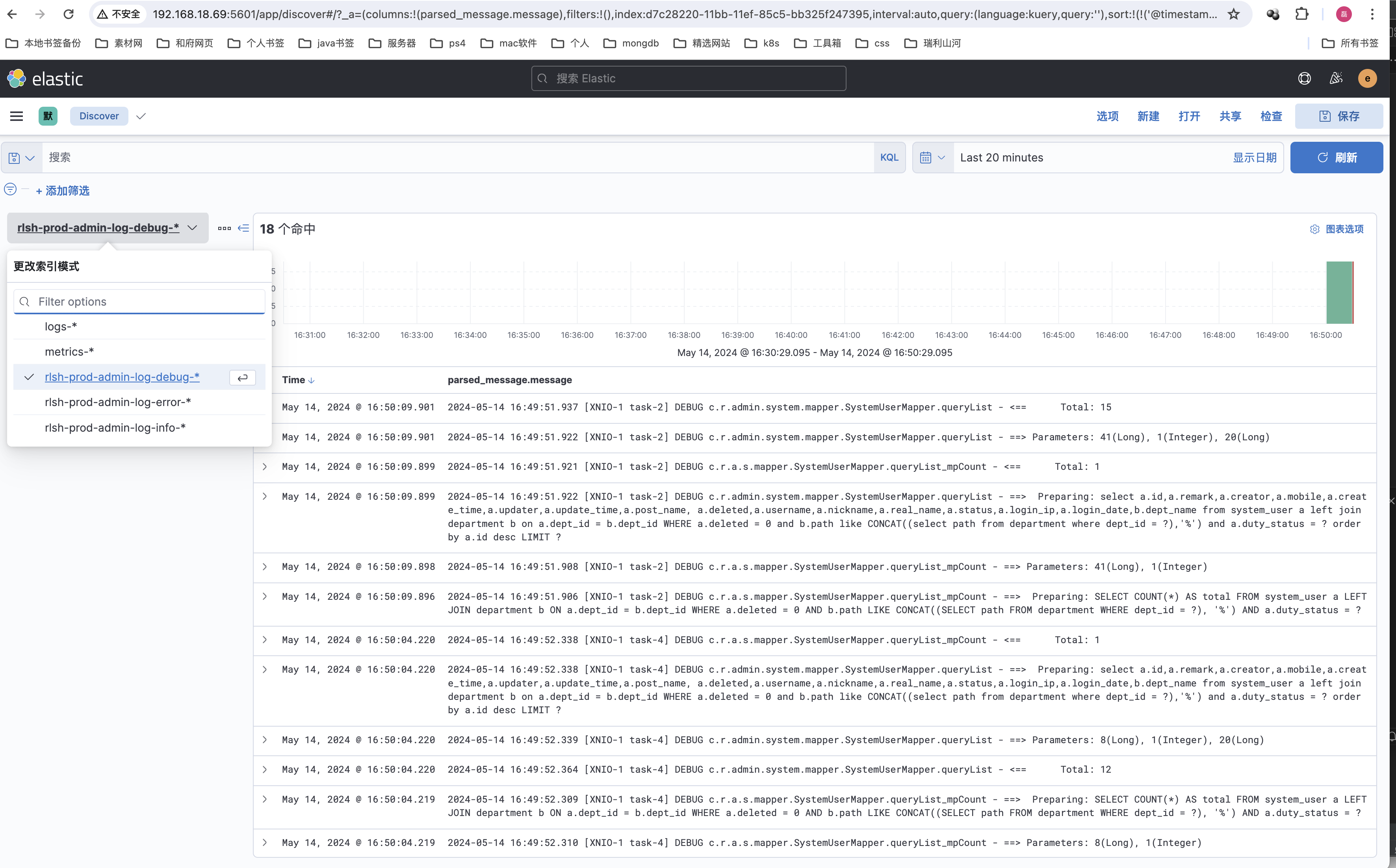

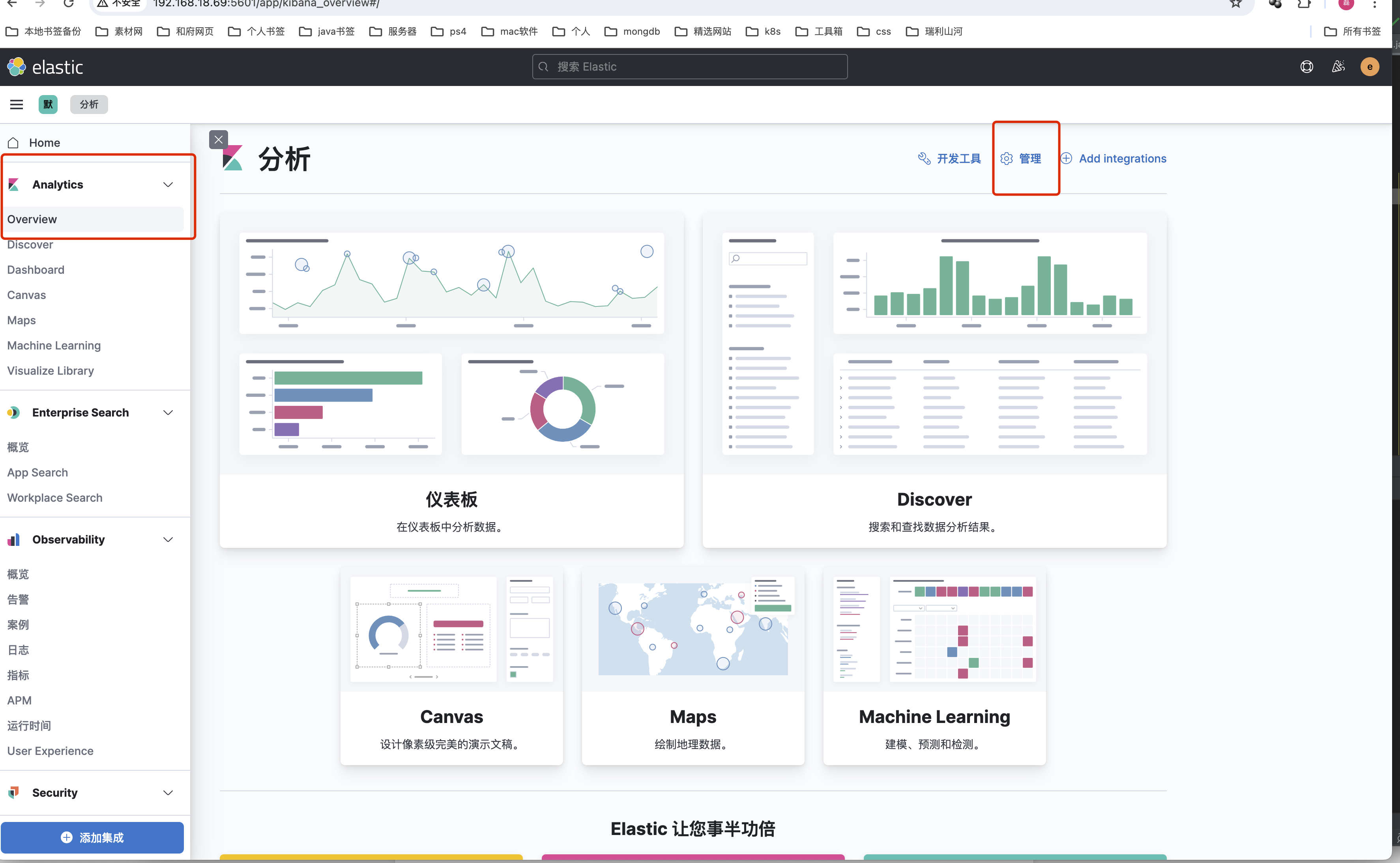

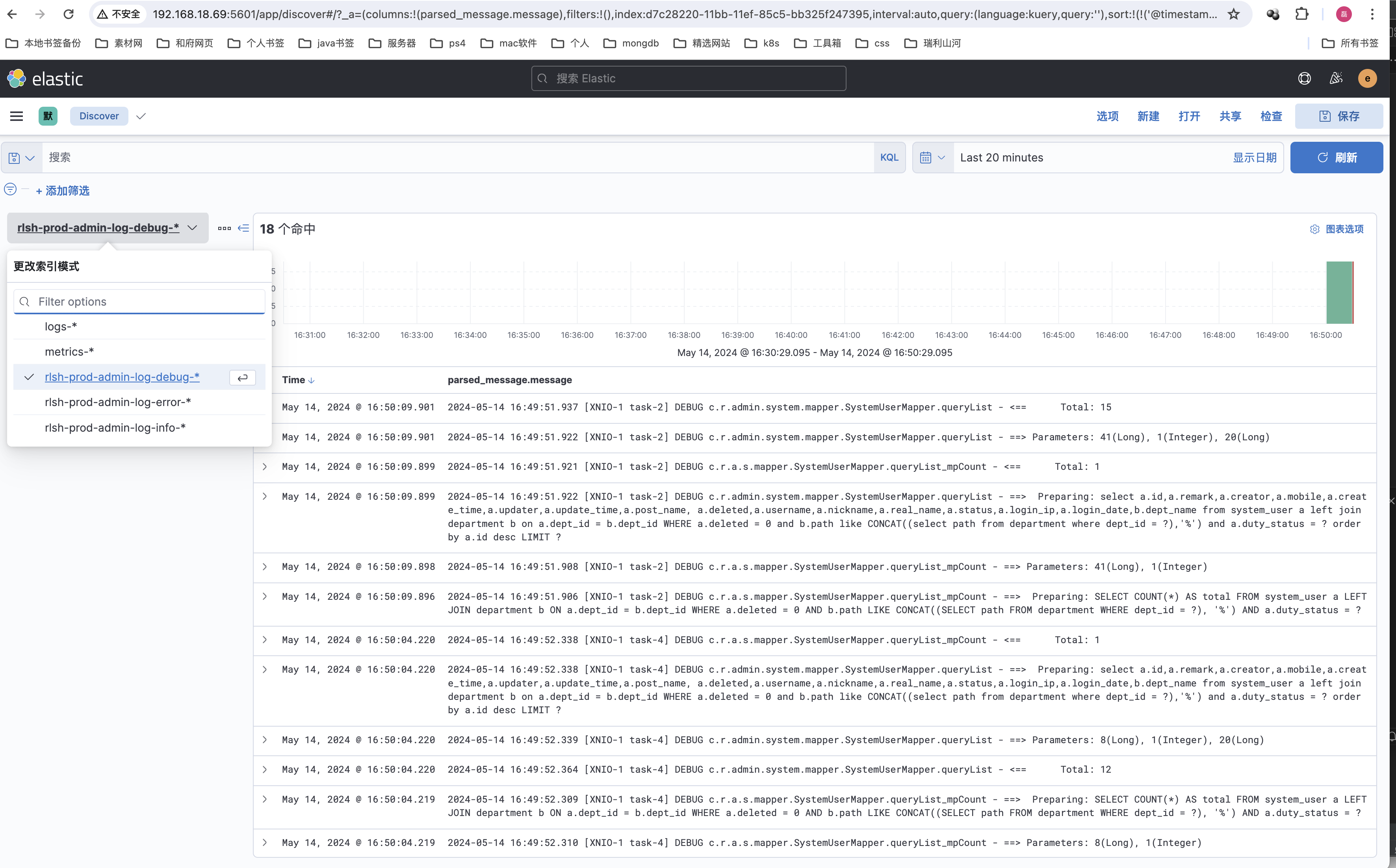

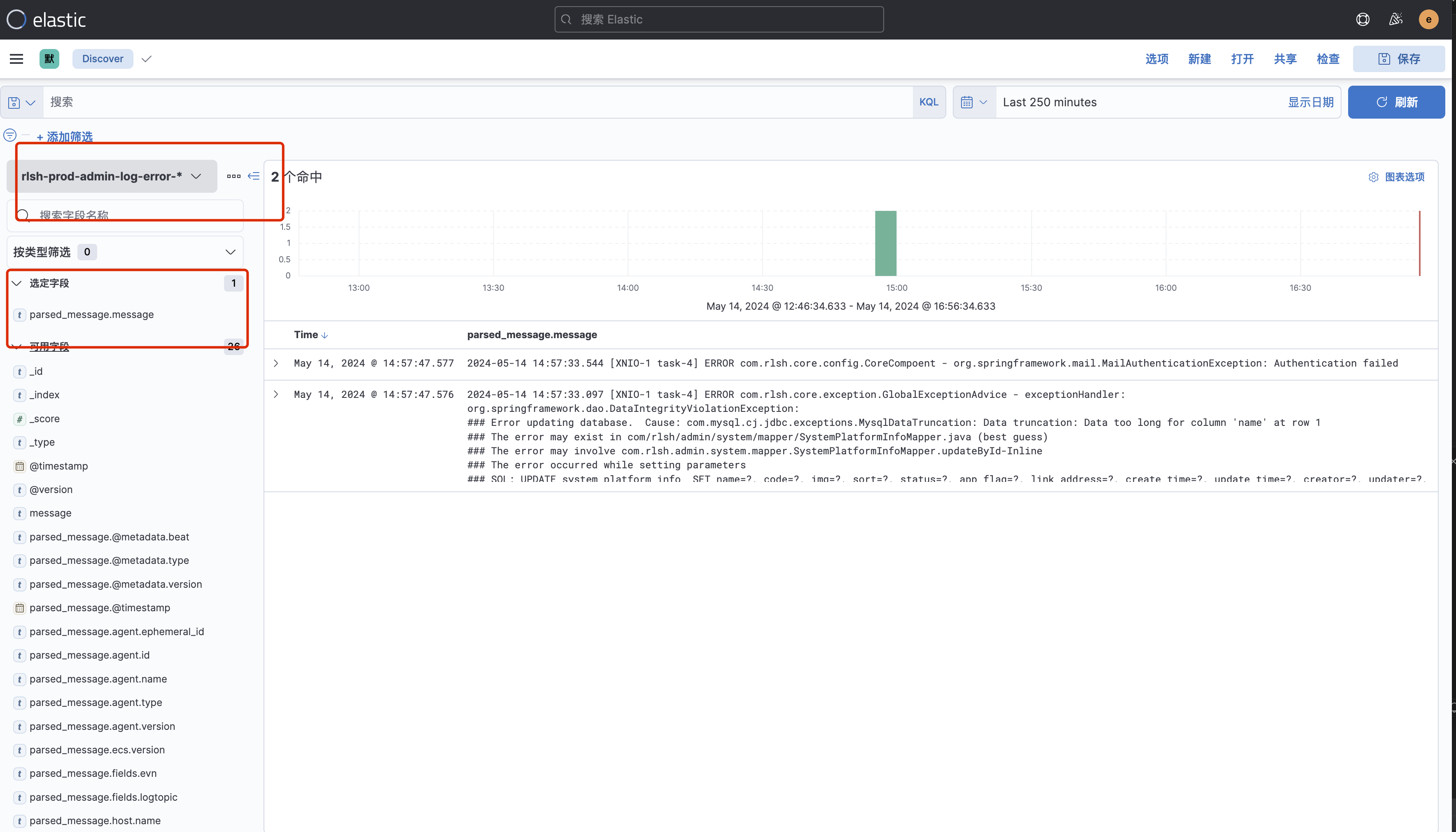

}最终效果

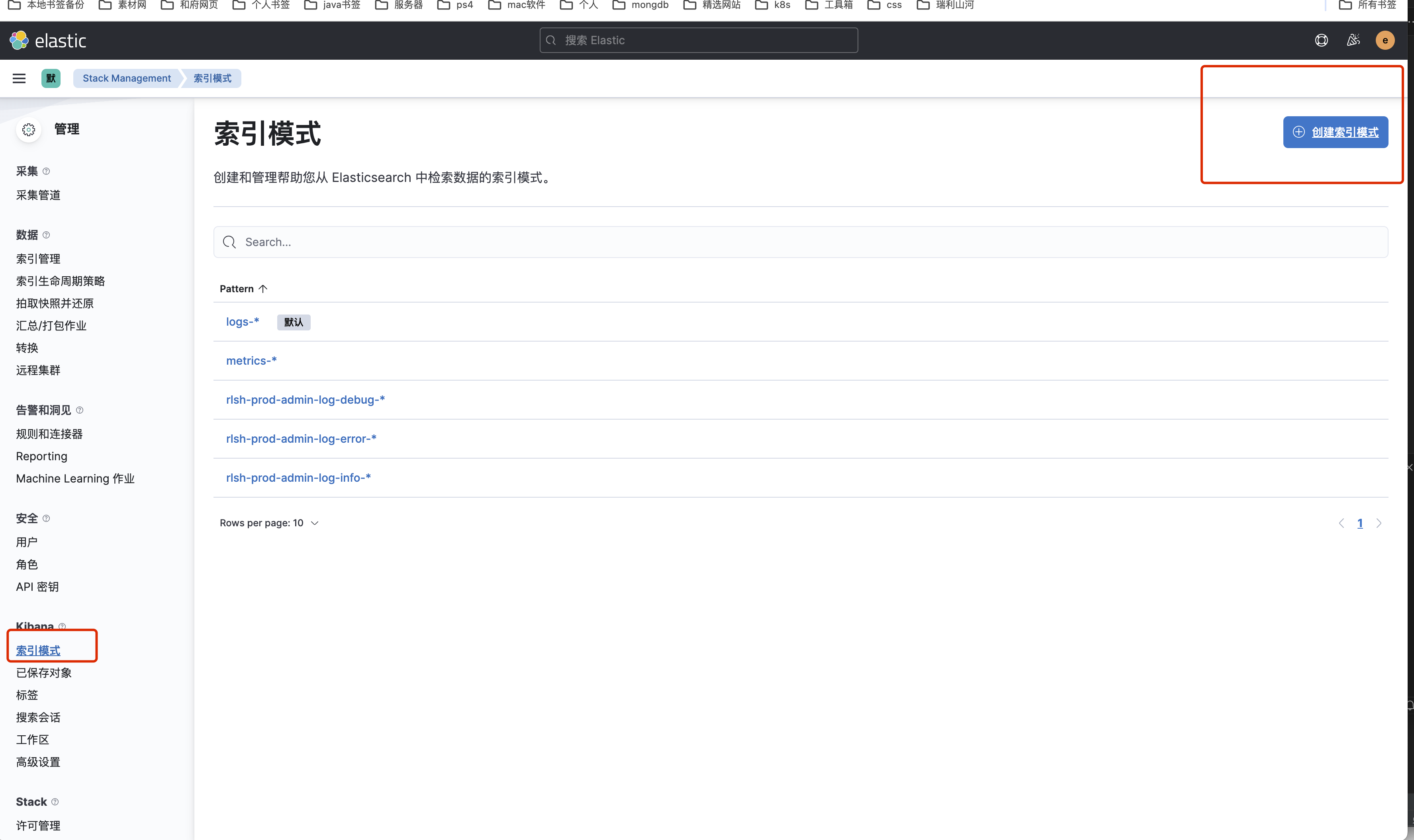

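Add an index pattern

Pitfalls

Pitfall 1: All of the commands above (deploying the K8s services, creating the Gitee private secret, and so on) must target the same namespace. Otherwise the resulting problems are very hard to track down.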
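A quick way to catch a namespace mismatch before applying anything is to compare the namespace values declared in the manifests. This is a minimal sketch under the assumption that each manifest sets metadata.namespace explicitly, as the files in this article do. The function names are hypothetical, and namespaces passed only through kubectl -n flags are not covered.

```shell
#!/bin/sh
# List the distinct namespaces declared in the given manifest files.
manifest_namespaces() {
  # Matches lines such as "  namespace: rlsh-project-prod" at any indentation.
  awk '$1 == "namespace:" { print $2 }' "$@" | sort -u
}

# Succeed only when all manifests agree on exactly one namespace.
same_namespace() {
  count=$(manifest_namespaces "$@" | wc -l | tr -d ' ')
  [ "$count" -eq 1 ]
}
```

For example, same_namespace deploy-k8s.yml deploy-service.yml || manifest_namespaces deploy-k8s.yml deploy-service.yml prints the conflicting values whenever the files disagree.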

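Pitfall 2: nginx-ingress caches its configuration. After updating (recreating) an Ingress, it is best to restart nginx-ingress:

kubectl rollout restart deployment ingress-nginx-controller -n ingress-nginx

Pitfall 3: The Spring Boot project running in K8s could not reach the Alibaba Cloud RDS database by domain name. As a workaround, the connection domain provided by Alibaba Cloud RDS was replaced with its IP address (which can be obtained from an IP lookup site). No proper fix was found at the time.

This is resolved in Pitfall 5.

Pitfall 4: After creating nginx-ingress, the local hosts file must be updated so that the domain points to the node where nginx-ingress is deployed.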

Pitfall 5: Public domain names cannot be reached (ping baidu.com, ping aliyun.com, and so on)

Ideally, change the DNS address to 114.114.114.114 or 223.5.5.5 (Alibaba Cloud's) before installing K8s.
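Before editing any network files, it can help to confirm which resolvers a machine currently uses. The following is a minimal sketch that reads a resolv.conf-style file; the function names are hypothetical, and the file path defaults to /etc/resolv.conf.

```shell
#!/bin/sh
# Print the resolver addresses configured in a resolv.conf-style file.
# Commented-out entries such as "#nameserver 192.168.18.1" are ignored.
list_nameservers() {
  awk '$1 == "nameserver" { print $2 }' "${1:-/etc/resolv.conf}"
}

# Succeed if the given resolver address is configured in the file.
# Usage: has_nameserver <ip> [file]
has_nameserver() {
  list_nameservers "${2:-/etc/resolv.conf}" | grep -Fxq -- "$1"
}
```

Running has_nameserver 114.114.114.114 || list_nameservers on each node shows at a glance which machines still point at the old resolver.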

ip addr
vi /etc/sysconfig/network-scripts/ifcfg-ens192
# Replace ens192 with the name of your network interface
BOOTPROTO=static # change dhcp to static (modify)
ONBOOT=yes # enable this configuration at boot, usually the last line (modify)
IPADDR=192.168.18.202
# Default gateway; for a VM in bridged mode use the router's gateway, usually .1
GATEWAY=192.168.18.1
# Subnet mask (add)
NETMASK=255.255.255.0
# DNS configuration
DNS1=223.5.5.5
DNS2=223.6.6.6
service network restart

Solution (reference: https://blog.imdst.com/k8s-podwu-fa-jie-xi-wai-wang-yu-ming-de-wen-ti/). Steps:

1. On the master, edit /etc/resolv.conf

vim /etc/resolv.conf
# Generated by NetworkManager
#nameserver 192.168.18.1 (the original internal IP; comment it out and add the line below)
nameserver 114.114.114.114

2. On each node, add the same nameserver 114.114.114.114 entry

vim /etc/resolv.conf
nameserver 114.114.114.114

3. On the master, restart the DNS pods

kubectl delete pod -l k8s-app=kube-dns -n kube-system
# Check the DNS pod status
kubectl get pods -n kube-system -l k8s-app=kube-dns
# Test DNS resolution
kubectl exec -it rlsh-admin-api-58f894cfcd-4xkqm -n rlsh-project-prod -- nslookup rm-***.mysql.rds.aliyuncs.com

4. Note that the DNS settings above are reset when the machine reboots and then have to be applied again. To keep them from being reset:

# ens192 is the network interface name here; check yours with ifconfig
vi /etc/sysconfig/network-scripts/ifcfg-ens192
# Change the DNS entries to 114.114.114.114

Pitfall 6: Pods are created very slowly

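While using Rancher to monitor Kubernetes and automate the release of the Java services, a few nodes consistently failed to create Java pods. At first the Alibaba Cloud image registry was suspected, but manually pulling the image on the server and releasing again did not help. It then turned out that, across all namespaces, many Helm-related pods could not be built because of network problems. After the Helm-related images were installed manually on the servers and the release was repeated, the problem went away. The suspicion is therefore that the many unfinished pod builds were preventing the Java pods from being built.

PS: The likely cause is that the Helm plugin install was clicked by accident in the Rancher UI, which kept retrying and blocked the builds of other applications. This problem lingered for about two weeks, and many approaches were tried before it was finally solved. Attached are the steps for optimizing the Spring Boot Docker image build. The main idea is to make full use of Docker's layer cache when images are pulled: the lib packages are copied out into their own cached layer, which speeds up the build.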